Real-time construction data APIs are transforming how developers build tools for the construction industry. These APIs provide instant access to live project data – like schedules, budgets, and equipment status – enabling faster decisions and smoother operations. Drawing on IoT sensors and cloud computing, and often feeding AI-driven analytics, they keep data flowing continuously and avoid the delays common in older systems.

Key Takeaways:

- What They Do: APIs sync live construction data, covering tasks, costs, materials, and more.

- Why It Matters: Instant insights improve project efficiency and reduce errors.

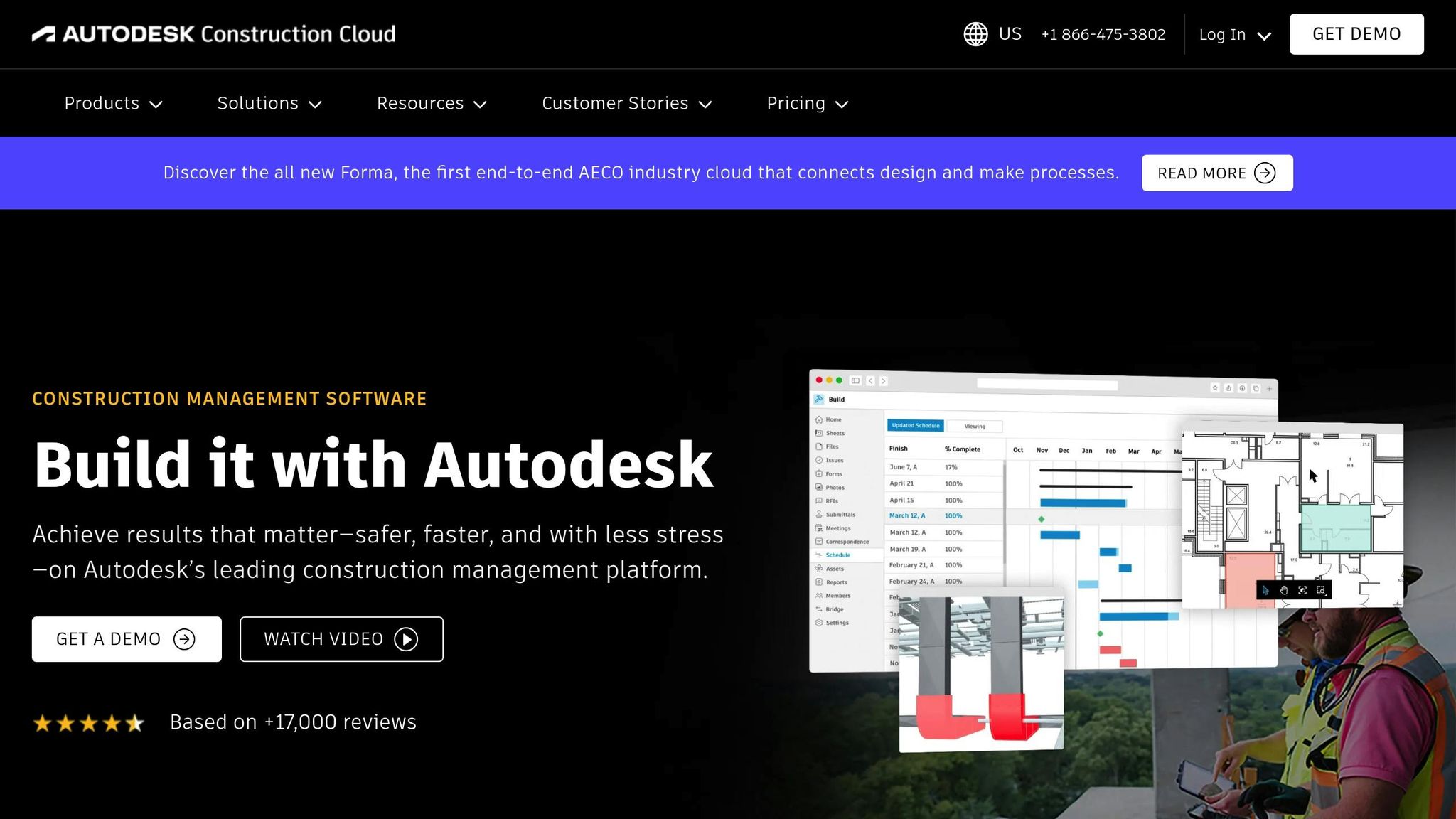

- Tools to Use: Platforms like Procore and Autodesk offer APIs with robust integration options.

- Challenges to Address: Developers must handle security, scalability, and real-time synchronization.

- Best Practices: Use secure authentication (OAuth2), test thoroughly with tools like Postman, and optimize systems for performance and growth.

For developers, these APIs open new opportunities to build scalable, real-time solutions tailored to the $10.8 billion construction tech market. Whether you’re creating automation tools or predictive analytics, mastering integration processes and tools is key to success.


Prerequisites and Tools for Integration

Before diving into the integration of real-time construction data APIs, it’s crucial to establish a strong groundwork. Having the right credentials, tools, and compliance measures in place can save you from headaches down the line. Here’s what you need to ensure a smooth integration process.

Core Prerequisites for API Integration

API Credentials and Authentication Setup: Most construction platforms rely on OAuth2 authentication or API keys for access. For example, Autodesk Platform Services (APS) requires you to register your application and obtain client credentials. These credentials grant access to specific scopes, such as account administration, file management, or cost management. Autodesk offers a range of APIs tailored for different functions [1][3].

Access Permissions and Scopes: Construction APIs often use role-based access control (RBAC) to define what data your application can access. Clearly specify the project data, user information, and system functions your tool will need. For instance, if you’re building a cost management feature, you might limit access to financial data while excluding workflows like RFIs.

Development Environment Setup: Create separate environments for development, staging, and production. This separation is essential when working with live project data, as mistakes could disrupt construction schedules or budgets. Many platforms provide sandbox environments to test your integrations without affecting real-world data.

Network and Security Configuration: Since construction data often includes sensitive information, security is paramount. Encrypt data in transit with TLS (version 1.2 at minimum, 1.3 where supported) and protect data at rest with AES-256 encryption [11].

Once these prerequisites are met, you can move on to the tools that make integration efficient.

Recommended Developer Tools

With your credentials, environments, and security measures in place, the right tools can simplify the integration process.

API Testing and Documentation Tools: Postman is a go-to tool for testing APIs. It allows you to test endpoints, manage authentication tokens, and even automate test suites. Additionally, understanding Swagger/OpenAPI documentation will help you navigate most construction API endpoints and data structures.

Construction-Specific SDKs: Autodesk offers SDKs packed with features like compilers, code samples, libraries, and debugging tools. These SDKs are designed to streamline tasks such as file uploads, data synchronization, and user authentication [5][7].

Document Management SDKs: For projects involving blueprints and technical drawings, tools like Nutrient’s SDKs can be invaluable. They support real-time drawing markup, version control, digital forms for field data capture, and compliance features like redaction and audit trails [4]. For example, these SDKs have helped engineers efficiently manage blueprints by enabling markup and reducing PDF processing overhead [4].

"Great tool that covers one of our product’s core functionalities." – Dmytro H., Principal Engineer [4]

Mapping and Spatial Analysis Tools: Since construction projects are tied to specific locations, spatial data tools are essential. Esri’s ArcGIS offers SDKs for platforms like JavaScript, .NET, Flutter, Kotlin, and more, along with Location Services for mapping, routing, geocoding, and data enrichment [6]. These tools are ideal for site planning, logistics, and project visualization.

Integration Platform Tools: If you’re juggling multiple API integrations, platforms that offer pre-built connectors and unified APIs can simplify the process. These tools let your team focus on core development instead of maintaining numerous API connections. Costs for these platforms range from $10,000 to $100,000 annually, with budget-friendly options for startups [2].

Understanding U.S.-Specific Compliance and Standards

Data Privacy Regulations: U.S. developers face a patchwork of privacy laws, including the California Consumer Privacy Act (CCPA) and California Privacy Rights Act (CPRA). Depending on your project, you may also need to comply with HIPAA for health data, FERPA for educational records, or state-specific laws like New York’s SHIELD Act [9]. Nearly half of U.S. states have privacy legislation in place or under consideration, adding to the complexity [9].

"Compliance is vital not just to protect user data and avoid regulatory action but also to avoid the erosion of trust in your business and backlash." – Nordic APIs [9]

Federal Data Standards: If your project involves federal funding, you’ll need to follow specific data standards. For example, the Federal Geographic Data Committee (FGDC) sets guidelines for geospatial data, while the National Information Exchange Model (NIEM) governs enterprise-wide information sharing [10]. Publicly funded projects may also need to adhere to the DATA Act of 2014, which mandates Federal Spending Transparency Data Standards [10].

Localized Data Formats: U.S. construction software follows local display conventions: dates as MM/DD/YYYY, measurements in imperial units (feet, inches, pounds), temperatures in Fahrenheit, and currency formatted as $1,234.56. Many APIs, however, exchange dates in ISO 8601 format (YYYY-MM-DD) and leave conversion to the client, so confirm which format each endpoint expects. Paying attention to these details can prevent integration hiccups.
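As a rough illustration, the helpers below convert values from interchange formats that many APIs use (ISO 8601 date strings, decimal strings for money, metric sensor readings) into U.S. display conventions. The function names are hypothetical and the input formats are assumptions; check each provider's documentation for what its endpoints actually return.

```python
from datetime import date
from decimal import Decimal, ROUND_HALF_UP

def to_us_date(iso_date: str) -> str:
    """Convert an ISO 8601 date string (YYYY-MM-DD) to MM/DD/YYYY."""
    return date.fromisoformat(iso_date).strftime("%m/%d/%Y")

def to_usd(amount: str) -> str:
    """Format a decimal amount string as U.S. currency with two decimal places."""
    value = Decimal(amount).quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)
    sign = "-" if value < 0 else ""
    return f"{sign}${abs(value):,.2f}"

def celsius_to_fahrenheit(celsius: float) -> float:
    """Convert a sensor reading from Celsius to Fahrenheit."""
    return celsius * 9 / 5 + 32

def meters_to_feet(meters: float) -> float:
    """Convert meters to feet (1 ft is defined as exactly 0.3048 m)."""
    return meters / 0.3048
```

For example, `to_usd("1234.5")` returns `"$1,234.50"`. Using `Decimal` rather than `float` avoids binary rounding surprises in currency values.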

Security and Audit Requirements: Implement detailed logging that records each event, its timestamp, the actor ID, and the affected object. Logs should be immutable and access-controlled. Weak safeguards are costly: when organizations lack proper security measures, the average cost of a data breach rises by 12.6%, to $5.05 million [11].
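Immutability is usually enforced by storage (for example, write-once buckets), but tampering can also be made detectable in the application by chaining each log entry to the hash of the previous one. A minimal sketch, assuming an in-memory list stands in for durable, access-controlled storage; the class and field names are illustrative and mirror the event, timestamp, actor, and object fields listed above.

```python
import hashlib
import json
from datetime import datetime, timezone

class AuditLog:
    """Append-only audit log in which each entry carries the previous entry's hash."""

    GENESIS = "0" * 64  # placeholder "previous hash" for the first entry

    def __init__(self) -> None:
        self.entries = []  # stand-in for durable, access-controlled storage

    def append(self, event: str, actor_id: str, object_id: str) -> dict:
        prev_hash = self.entries[-1]["hash"] if self.entries else self.GENESIS
        entry = {
            "event": event,
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "actor_id": actor_id,
            "object_id": object_id,
            "prev_hash": prev_hash,
        }
        # Hash a canonical JSON encoding so verification is deterministic.
        payload = json.dumps(entry, sort_keys=True).encode("utf-8")
        entry["hash"] = hashlib.sha256(payload).hexdigest()
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Recompute every hash; an edited or reordered entry, or one deleted mid-log, fails."""
        prev_hash = self.GENESIS
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev_hash"] != prev_hash:
                return False
            payload = json.dumps(body, sort_keys=True).encode("utf-8")
            if hashlib.sha256(payload).hexdigest() != entry["hash"]:
                return False
            prev_hash = entry["hash"]
        return True
```

This detects edits and reordering, but not truncation of the newest entries; storing the latest hash somewhere external, or using write-once storage, closes that gap.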

Industry Standards Compliance: The American National Standards Institute (ANSI) establishes voluntary consensus standards that are often recognized by regulators [8][10]. For construction APIs, this may involve adhering to building information modeling (BIM) standards to ensure smooth data integration with other software systems.

Step-by-Step API Integration Process

With the necessary groundwork in place, here’s how to approach real-time data integration effectively.

Researching and Choosing the Right API Provider

Selecting the right API provider requires balancing factors like security, scalability, documentation, cost, and support.

Security should always come first. Look for providers that support strong encryption (such as TLS 1.3), OAuth 2.0 for authentication, and multi-factor authentication. Confirm that the platform lets you enforce role-based permissions and manage keys securely, with vault storage and token rotation, to protect sensitive data.

Scalability is crucial, whether you’re a startup building an MVP or a large company managing complex projects. Ensure the provider supports features like auto-scaling and load balancing to handle varying data volumes. As Tim Kelleher from Operational Standards shared:

"Agave captured Brinkman’s requirements and built an integration we can rely on. As project complexity increases, we needed an integration to ensure Autodesk and CMiC provide accurate data to our project teams. This integration does exactly that." – Tim Kelleher, Operational Standards

Documentation plays a key role in saving time and effort during development. Prioritize APIs with clear documentation, code samples, SDKs, and active developer communities. APIs with intuitive interfaces can significantly reduce implementation and maintenance headaches.

Cost considerations are especially important for startups. Evaluate pricing models like pay-as-you-go, subscription tiers, or volume-based discounts to find one that fits your usage patterns. APIs can help reduce internal development costs, allowing you to focus on your main product.

Reliability and support shouldn’t be overlooked. Check the provider’s uptime history, average response times, and available support channels. User reviews and testimonials can provide insights into how well the provider performs in real-world scenarios.

Technical Setup and Configuration

Once you’ve chosen an API provider, it’s time to set up authentication, versioning, and configuration for stability.

Authentication is more secure with OAuth 2.0 than with static API keys: it issues short-lived access tokens alongside refresh tokens, supports token rotation, and grants granular, scope-based permissions. For Microsoft-based platforms, register both the client and the web API applications, and configure permissions for accessing construction data and Microsoft Graph.
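On the client side, token handling mostly means caching the access token and refreshing it shortly before it expires. A minimal sketch: `fetch_token` is a hypothetical stand-in for your provider's token-endpoint call (for example, a refresh-token grant), and the 60-second safety margin is an assumption.

```python
import time
from dataclasses import dataclass
from typing import Callable, Optional

@dataclass
class Token:
    access_token: str
    expires_at: float  # epoch seconds

class TokenManager:
    """Caches an OAuth 2.0 access token and refreshes it before it expires."""

    def __init__(self, fetch_token: Callable[[], Token],
                 skew_seconds: float = 60.0,
                 clock: Callable[[], float] = time.time) -> None:
        self._fetch_token = fetch_token  # hypothetical: calls the provider's token endpoint
        self._skew = skew_seconds        # refresh this long before actual expiry
        self._clock = clock              # injectable for testing
        self._token: Optional[Token] = None

    def get(self) -> str:
        """Return a valid access token, refreshing it if missing or about to expire."""
        if self._token is None or self._clock() >= self._token.expires_at - self._skew:
            self._token = self._fetch_token()
        return self._token.access_token
```

The margin absorbs clock drift and request latency so a token is never sent seconds before it expires. This sketch is not thread-safe; a shared client would need a lock around the refresh.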

Versioning is critical from the start. Choose a strategy like URI versioning (e.g., /v2/projects/123), query string versioning (/projects/123?version=2), or header versioning. Stay updated with the latest versions to avoid scrambling when older ones are deprecated. Use alert systems to catch schema changes early and prevent user impact.

Rate limiting helps maintain consistent performance. Understand the provider’s limits and consider adding your own rate-limiting layer. Batch requests when possible and upgrade plans as your needs grow.
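A client-side rate-limiting layer can be as small as a token bucket: each request spends a token, and tokens refill at a fixed rate up to a burst capacity. A minimal sketch; the rate and capacity are parameters you would set from your provider's published limits, which this example does not assume.

```python
import time
from typing import Callable

class TokenBucket:
    """Token-bucket limiter: bursts up to `capacity`, refilled at `rate` tokens per second."""

    def __init__(self, rate: float, capacity: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.rate = rate
        self.capacity = capacity
        self.tokens = capacity  # start full so an initial burst is allowed
        self.clock = clock
        self.last = clock()

    def try_acquire(self, cost: float = 1.0) -> bool:
        """Spend `cost` tokens if available; return False if the request should wait."""
        now = self.clock()
        # Refill in proportion to elapsed time, never exceeding capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False
```

Call `try_acquire()` before each request, and wait or queue the request when it returns `False`.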

Data flow optimization requires understanding the API’s data model, URI structure, and supported formats like JSON or XML. Use pagination for large datasets (e.g., limit=25&offset=50) and enable field selection to minimize payload sizes. Compression methods like Gzip or Brotli can further improve data transfer efficiency.
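A minimal sketch of walking a limit/offset-paginated collection. `fetch_page` is a hypothetical callable standing in for an HTTP GET such as `/projects?limit=25&offset=50`; real APIs differ in parameter names and in how they signal the last page (an empty page, a short page, or a `next` link).

```python
from typing import Any, Callable, Dict, Iterator, List

Record = Dict[str, Any]

def paginate(fetch_page: Callable[[int, int], List[Record]],
             limit: int = 25) -> Iterator[Record]:
    """Yield records page by page until a short (or empty) page signals the end."""
    offset = 0
    while True:
        page = fetch_page(limit, offset)  # hypothetical: GET ...?limit={limit}&offset={offset}
        yield from page
        if len(page) < limit:
            break  # last page reached
        offset += limit
```

Because it is a generator, callers can start processing the first page while later pages are still being fetched, and memory use stays bounded by one page.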

With these steps complete, you’re ready to implement and test real-time features.

Implementing and Testing Real-Time Features

For real-time data streams, push-based delivery through WebSockets or Server-Sent Events (SSE) is far more efficient than polling, because clients receive changes as they happen instead of repeatedly asking for them. Event-driven architectures built on tools like Apache Kafka or RabbitMQ decouple components and let them react to data changes as soon as they occur.
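To show what SSE looks like on the wire, here is a minimal parser that turns `text/event-stream` lines into events. It follows the field rules of the SSE specification (`event`, `data`, and `id` fields, comment lines starting with `:`, and a blank line ending each event) but omits `retry` handling and reconnection; the event names in the test are hypothetical.

```python
from dataclasses import dataclass
from typing import Iterable, Iterator, List, Optional

@dataclass
class SSEEvent:
    event: str
    data: str
    id: Optional[str]

def parse_sse(lines: Iterable[str]) -> Iterator[SSEEvent]:
    """Parse text/event-stream lines (without line terminators) into events."""
    event_type: str = ""
    data_lines: List[str] = []
    last_id: Optional[str] = None
    for line in lines:
        if line == "":
            # A blank line dispatches the buffered event, if it carried any data.
            if data_lines:
                yield SSEEvent(event_type or "message", "\n".join(data_lines), last_id)
            event_type, data_lines = "", []
            continue
        if line.startswith(":"):
            continue  # comment, often used as a keep-alive
        field, sep, value = line.partition(":")
        if sep and value.startswith(" "):
            value = value[1:]  # a single leading space after the colon is dropped
        if field == "event":
            event_type = value
        elif field == "data":
            data_lines.append(value)
        elif field == "id" and "\0" not in value:
            last_id = value  # the last event ID persists across events
        # "retry" and unknown fields are ignored in this sketch
```

Multiple `data:` lines in one event are joined with newlines, which is how a server can send multi-line JSON payloads.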

To maintain stable connections, use connection pools and implement retry logic with exponential backoff. Design your system to handle network interruptions gracefully, ensuring no critical data is lost.
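A minimal sketch of retry with exponential backoff and full jitter, where each wait is a random value between zero and a capped, doubling ceiling. Which failures count as transient is an assumption here: the sketch retries only `ConnectionError` and `TimeoutError`, and the `sleep` parameter is injectable so the logic can be tested without waiting.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")

# Assumption: these exception types represent transient, retryable failures.
TRANSIENT_ERRORS = (ConnectionError, TimeoutError)

def retry_with_backoff(op: Callable[[], T], max_attempts: int = 5,
                       base_delay: float = 0.5, max_delay: float = 30.0,
                       sleep: Callable[[float], None] = time.sleep) -> T:
    """Call `op`, retrying transient failures with capped exponential backoff and full jitter."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(max_attempts):
        try:
            return op()
        except TRANSIENT_ERRORS:
            if attempt == max_attempts - 1:
                raise  # out of attempts: surface the last error to the caller
            # The ceiling doubles each attempt (0.5 s, 1 s, 2 s, ...) up to max_delay;
            # the actual wait is random within [0, ceiling] to spread out retries.
            ceiling = min(max_delay, base_delay * 2 ** attempt)
            sleep(random.uniform(0, ceiling))
    raise AssertionError("not reached")
```

The jitter keeps many clients that failed at the same moment, for example after a brief outage, from retrying in lockstep and overloading the API again.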

Testing real-time features requires a thorough approach. Combine functional, performance, and end-to-end testing within your CI/CD pipeline. Tools like LoadView, JMeter, or Apache Bench can simulate real-world traffic and identify bottlenecks. Pair synthetic monitoring with real-user monitoring to gain a complete picture of system performance.

"API integrations simplify complex software development tasks. However, the teams responsible for building and maintaining integrations routinely face several challenges: API versioning, APIs that change over time, Authentication and security, Error handling, Scalability, Testing, Rate limiting, Documentation and knowledge transfers." – James Walker, @Merge

With real-time features in place, the next step is to fine-tune for performance and scalability.

Optimizing for Performance and Scalability

After setting up real-time functionality, focus on optimizing performance to handle growing demands.

Start by monitoring performance around the clock with tools like Prometheus, Grafana, or New Relic. Track key metrics like response times, throughput, and error rates. Set up precise alerts to catch genuine issues while avoiding alert fatigue.

"Measure, measure, measure, trace, measure, measure. This is it. I’m a performance specialist and measuring is the key." – Izacus, performance specialist

Caching can greatly improve performance for frequently accessed data. Cache on the server (for example, with Redis or Memcached), on the client, and at the CDN edge. Cache commonly requested data, such as project details, while keeping sensitive information out of shared caches.
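For data that changes rarely, such as project details, a time-to-live (TTL) cache avoids repeated API calls. A minimal in-process sketch; in production the same idea typically maps onto Redis keys set with an expiry. The 300-second TTL and the key format in the test are assumptions.

```python
import time
from typing import Any, Callable, Dict, Tuple

class TTLCache:
    """In-process cache whose entries expire `ttl` seconds after being stored."""

    def __init__(self, ttl: float = 300.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.ttl = ttl
        self.clock = clock
        self._store: Dict[str, Tuple[float, Any]] = {}  # key -> (expires_at, value)

    def get_or_load(self, key: str, loader: Callable[[], Any]) -> Any:
        """Return a fresh cached value, or call `loader` and cache its result."""
        now = self.clock()
        hit = self._store.get(key)
        if hit is not None and hit[0] > now:
            return hit[1]  # fresh entry: skip the API call
        value = loader()  # miss or expired: fetch from the source API
        self._store[key] = (now + self.ttl, value)
        return value

    def invalidate(self, key: str) -> None:
        """Drop an entry early, e.g. when a change event arrives for that record."""
        self._store.pop(key, None)
```

Pairing a TTL with explicit invalidation on change events gives a safety net: stale data disappears on its own even if an event is missed.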

Database optimization is another critical step. Index frequently accessed fields, simplify complex queries, and use connection pooling to reduce overhead. For construction applications, proper indexing can significantly speed up query times.
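To see indexing at work, this sketch uses Python's built-in `sqlite3` module with a hypothetical `tasks` table and asks SQLite for its query plan. With the index in place, lookups by `project_id` go through the index instead of scanning every row. Production databases have their own `EXPLAIN` output, but the principle carries over.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE tasks (id INTEGER PRIMARY KEY, project_id INTEGER, status TEXT, due_date TEXT)"
)
# Hypothetical sample data: 1,000 tasks spread across 50 projects.
conn.executemany(
    "INSERT INTO tasks (project_id, status, due_date) VALUES (?, ?, ?)",
    [(i % 50, "open", "2024-06-01") for i in range(1000)],
)

# Index the column that most queries filter on.
conn.execute("CREATE INDEX idx_tasks_project ON tasks (project_id)")

query = "SELECT * FROM tasks WHERE project_id = ?"
plan = conn.execute("EXPLAIN QUERY PLAN " + query, (7,)).fetchall()
# The last column of each plan row is a human-readable description of that step;
# it should name idx_tasks_project rather than a full-table scan.
plan_detail = " ".join(str(row[-1]) for row in plan)
rows = conn.execute(query, (7,)).fetchall()
```

Checking the plan before and after adding an index is a quick way to confirm the database actually uses it.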

When planning for scalability, horizontal scaling is often more effective than vertical scaling. Adding more servers (horizontal scaling) provides better fault tolerance and cost efficiency compared to increasing server capacity (vertical scaling). Use load balancing to distribute requests across servers, and consider adopting a microservices architecture for independent scaling of components.

| Aspect | Horizontal Scaling (Out) | Vertical Scaling (Up) |
|---|---|---|
| Method | Add more servers | Increase server capacity |
| Cost | Incremental, pay-as-you-grow | Larger upfront investments |
| Scalability Limit | Nearly unlimited | Limited by hardware constraints |
| Fault Tolerance | High (distributed system) | Lower (single point of failure) |
| Implementation Complexity | Higher (requires stateless design) | Lower (minimal code changes) |

Auto-scaling is another powerful tool. Set up auto-scaling to adjust server instances based on demand, scaling up during peak times and down during quieter periods. This approach is especially useful for construction projects, which often see usage spikes during specific phases.
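Many auto-scalers use a proportional rule; the Kubernetes Horizontal Pod Autoscaler, for example, documents `desired = ceil(current × currentMetric / targetMetric)` and skips changes within a small tolerance of the target. A minimal sketch of that calculation with replica bounds; the default limits and the CPU-style target in the test are assumptions.

```python
import math

def desired_replicas(current: int, current_metric: float, target_metric: float,
                     min_replicas: int = 2, max_replicas: int = 20,
                     tolerance: float = 0.1) -> int:
    """Proportional scaling rule: scale replicas by the ratio of observed metric to target."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        return current  # close enough to target: avoid flapping
    desired = math.ceil(current * ratio)
    # Clamp to the configured bounds.
    return max(min_replicas, min(max_replicas, desired))
```

For example, four instances averaging 90% CPU against a 60% target yield `ceil(4 × 1.5) = 6` instances; the minimum bound keeps some redundancy even during quiet periods.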

As one developer wisely put it:

"Build with general good practices for your initial version of the application and monitor to see where you actually need it to be more performant or scalable and make your changes then." – mikevalstar

Start with a basic setup, monitor usage, and expand as needed. This strategy keeps costs manageable while preparing your system for future growth.


Addressing Key Integration Challenges

Even with the best setup and fine-tuning, developers often face hurdles when integrating real-time construction data APIs. Knowing these challenges and having effective strategies to tackle them can save time and avoid costly errors.

Handling Data Synchronization Issues

Data synchronization problems like latency, conflicts, and inconsistencies are common in real-time integrations. Polling makes them worse: by one estimate, only 1.5% of polling requests retrieve new data, so most calls waste resources while updates still arrive late[12].

To address this, many organizations are turning to Event-Driven Architecture (EDA). In fact, over 72% of global organizations now use EDA to power their systems and processes[14]. As SAP describes:

"Event-driven architecture (EDA) is an integration model that detects important ‘events’ in a business – such as a transaction or an abandoned shopping cart – and acts on them in real time."

This approach ensures near-instant updates when project statuses change, materials arrive, or safety issues arise.
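The core of the event-driven pattern can be sketched in-process: producers publish named events, and any number of subscribers react without knowing about each other. This is a toy stand-in for a broker such as Kafka or RabbitMQ (no persistence, ordering guarantees, or retries), and the event names are hypothetical.

```python
from collections import defaultdict
from typing import Any, Callable, DefaultDict, Dict, List

Handler = Callable[[Dict[str, Any]], None]

class EventBus:
    """Minimal in-process publish/subscribe bus."""

    def __init__(self) -> None:
        self._handlers: DefaultDict[str, List[Handler]] = defaultdict(list)

    def subscribe(self, event_type: str, handler: Handler) -> None:
        """Register a handler to run whenever `event_type` is published."""
        self._handlers[event_type].append(handler)

    def publish(self, event_type: str, payload: Dict[str, Any]) -> int:
        """Deliver the event to every subscriber; return how many handlers ran."""
        handlers = self._handlers.get(event_type, [])
        for handler in handlers:
            handler(payload)
        return len(handlers)
```

An inventory service and a notification service could both subscribe to a `material.delivered` event, and the producer never needs to change when a new consumer is added.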

Another key method is Change Data Capture (CDC), which replicates high-volume data with minimal impact – only a 1–3% load on source systems[14]. Jeffrey Richman highlights its value:

"Change Data Capture isn’t just a modern alternative to batch ETL – it’s a foundational capability for organizations that need to move fast, stay in sync, and make decisions in real time."

In addition, enforcing strict data validation can catch errors early. For instance, ensuring material quantities are non-negative, dates align with project timelines, and costs stay within budgets can significantly improve data accuracy.
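The three checks described above can be expressed as a validation function that runs before a record is accepted. The record fields (`quantity`, `delivery_date`, `cost`) and the project window and budget are hypothetical; the sketch returns every violation at once rather than failing on the first.

```python
from dataclasses import dataclass
from datetime import date
from decimal import Decimal
from typing import List

@dataclass
class MaterialRecord:
    quantity: Decimal
    delivery_date: date
    cost: Decimal

@dataclass
class ProjectLimits:
    start: date
    end: date
    remaining_budget: Decimal

def validate(record: MaterialRecord, limits: ProjectLimits) -> List[str]:
    """Return human-readable violations; an empty list means the record is valid."""
    errors: List[str] = []
    if record.quantity < 0:
        errors.append("quantity must be non-negative")
    if not (limits.start <= record.delivery_date <= limits.end):
        errors.append("delivery_date falls outside the project timeline")
    if record.cost > limits.remaining_budget:
        errors.append("cost exceeds the remaining budget")
    return errors
```

Returning all violations together makes error responses more useful to field staff who need to fix a submission in one pass.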

Here’s a quick comparison of periodic refresh and event-based synchronization:

| Aspect | Periodic Refresh | Event-Based Synchronization |
|---|---|---|
| Timing | Regular intervals | Real-time, triggered by events |
| Latency | Higher (minutes to hours) | Lower (seconds to milliseconds) |
| Resource Usage | Batch processing, efficient | Continuous processing, higher demand |
| Cost | Lower operational costs | Higher infrastructure costs |
| Complexity | Simple to implement | Requires event infrastructure |
| Best For | Reporting, analytics, backups | Live dashboards, fraud detection |
| Failure Recovery | Retry entire batch | Individual event recovery |

With synchronization challenges addressed, the next step is ensuring your system can handle growing data volumes.

Ensuring Scalability

Once synchronization is under control, scalability becomes a top priority. Data volumes are growing rapidly: an estimated 147 zettabytes of data were generated worldwide in 2024, up from 64 zettabytes in 2020, with further steep growth projected through 2027[16]. To manage this growth, horizontal scaling is key. Adding servers to distribute workloads offers virtually unlimited scalability and boosts fault tolerance. However, many cloud resources are over-provisioned by 30–45%[13], so optimizing your infrastructure based on actual usage can help cut costs.

Martyn Davies, Developer Advocate at Zuplo, emphasizes this balance:

"Truly scalable API products balance immediate needs with future potential, creating interfaces that accommodate explosive growth without requiring costly rebuilds."

Auto-scaling is particularly useful when traffic fluctuates, as it often does across project phases. By dynamically adding or removing server instances based on demand, you pay only for the capacity you use. Additionally, strategic caching can reduce API calls by up to 70% by storing non-volatile data like project details and material specs, easing system load and improving response times[15].

Event-driven architecture also supports independent scaling of services. Pairing this with database optimizations – such as indexing, connection pooling, and data partitioning – ensures your system remains responsive, even as data volumes soar.
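Data partitioning spreads load by routing each record to a shard by key. A minimal sketch of hash partitioning by project ID; it uses a stable digest from `hashlib` rather than Python's built-in `hash()`, which is randomized per process for strings. The shard count is an assumption.

```python
import hashlib

def shard_for(project_id: str, num_shards: int) -> int:
    """Map a project ID to a shard index, identically across processes and hosts."""
    digest = hashlib.sha256(project_id.encode("utf-8")).digest()
    # Use the first 8 bytes of the digest as an unsigned integer, then take the modulus.
    return int.from_bytes(digest[:8], "big") % num_shards
```

Keeping all of a project's records on one shard keeps per-project queries local. Simple modulo hashing remaps most keys when the shard count changes, which is why systems that reshard often use consistent hashing instead.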

While scalability ensures systems can handle growth, security measures are essential to protect them from threats.

Mitigating Security Risks

Security is a critical concern for API integrations, especially since APIs account for 71% of all web traffic, and 84% of security professionals reported at least one API-related incident in the past year[17]. A strong security foundation begins with robust authentication and authorization. Using OAuth 2.0 with PKCE and securely implemented JWTs ensures only authorized users can access data, while Role-Based Access Control (RBAC) limits access based on user roles.
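PKCE (RFC 7636) binds an authorization code to the client that requested it: the client generates a random `code_verifier`, sends its SHA-256 `code_challenge` with the authorization request, and later proves possession by sending the verifier with the token request. A minimal sketch of the generation step using only the standard library; how the values are transmitted depends on your provider's OAuth endpoints.

```python
import base64
import hashlib
import secrets
from typing import Tuple

def make_pkce_pair() -> Tuple[str, str]:
    """Return (code_verifier, code_challenge) using the S256 method from RFC 7636."""
    # 32 random bytes encode to a 43-character base64url string (the spec allows 43-128).
    verifier = secrets.token_urlsafe(32)
    digest = hashlib.sha256(verifier.encode("ascii")).digest()
    # The challenge is base64url-encoded without '=' padding, as the spec requires.
    challenge = base64.urlsafe_b64encode(digest).rstrip(b"=").decode("ascii")
    return verifier, challenge
```

The authorization request carries `code_challenge` and `code_challenge_method=S256`; the token request later carries `code_verifier`. Keep the verifier out of logs and URLs.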

Data encryption is non-negotiable. Use TLS 1.2 or higher for API communications and AES-256 encryption for sensitive data storage. Combine this with rigorous input validation – such as whitelisting and JSON schema validation – to prevent injection attacks and other vulnerabilities.

To guard against abuse and denial-of-service attacks, implement rate limiting and throttling. Algorithms like the Token Bucket or Leaky Bucket can help manage traffic, ensuring the system remains stable during spikes.
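Where a token bucket tolerates bursts up to its capacity, a leaky bucket used as a queue smooths traffic into a steady outflow. A minimal single-process sketch: requests wait in a bounded queue, `drain()` releases them at a fixed rate, and requests that arrive when the queue is full are rejected (an API would typically answer those with HTTP 429). The rate and capacity are assumptions, and `drain()` is meant to be called regularly, for example from a worker loop.

```python
import time
from collections import deque
from typing import Any, Callable, Deque, List

class LeakyBucketQueue:
    """Buffers up to `capacity` requests and releases them at `rate` per second."""

    def __init__(self, rate: float, capacity: int,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.interval = 1.0 / rate
        self.capacity = capacity
        self.clock = clock
        self.queue: Deque[Any] = deque()
        self.next_release = clock()

    def offer(self, request: Any) -> bool:
        """Enqueue a request; False means the bucket is full (reject, e.g. with HTTP 429)."""
        if len(self.queue) >= self.capacity:
            return False
        if not self.queue:
            # An idle bucket must not bank release slots for a later burst.
            self.next_release = max(self.next_release, self.clock())
        self.queue.append(request)
        return True

    def drain(self) -> List[Any]:
        """Release every queued request whose slot has arrived, at a constant outflow rate."""
        now = self.clock()
        released = []
        while self.queue and self.next_release <= now:
            released.append(self.queue.popleft())
            self.next_release += self.interval
        return released
```

The output rate never exceeds `rate`, no matter how bursty the input, which protects downstream services at the cost of added queueing delay.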

Continuous monitoring is another must. Detailed logging and real-time analysis through SIEM systems can identify unusual activity, such as after-hours access or unexpected data downloads. For U.S.-based construction projects, aligning with frameworks such as NIST CSF and SSDF and ISO 27001, plus PCI DSS if you handle payment card data, strengthens your security posture.

Frontegg captures the essence of API security:

"API security is not just about reducing risk. It’s about unlocking speed and control without piling more work on developers."

Regular security testing, such as penetration testing, along with keeping libraries, dependencies, and API versions current, is essential for maintaining a secure and reliable environment.

Best Practices and Maintenance

Getting your real-time construction data APIs up and running is just the first step. The real challenge lies in keeping them functioning smoothly over time. To ensure your integration remains reliable, secure, and efficient, you’ll need a structured plan for maintenance, updates, and optimization. Let’s dive into the key practices that can help you achieve long-term success.

Regular Updates and Version Management

Managing API versions is a cornerstone of maintaining stable systems. Werner Vogels, CTO of Amazon, has long stressed that APIs are effectively permanent once clients depend on them, because changing them can break those clients. This underscores the importance of having a solid versioning strategy to avoid disruptions and ensure backward compatibility.

A widely used approach is Semantic Versioning (MAJOR.MINOR.PATCH), which clearly communicates the type of changes being introduced – whether they involve breaking updates, new features, or bug fixes. Tom Preston-Werner, the creator of Semantic Versioning, puts it simply:

"Semantic versioning is a simple set of rules and requirements that dictate how version numbers are assigned and incremented." [18]

Many providers, like Procore and Autodesk Construction Cloud, maintain multiple API versions simultaneously. This gives users time to adjust to updates without rushing. To stay ahead, set up automated notifications for deprecation warnings and major updates, which are often announced 6–12 months in advance.
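Semantic versions can be compared mechanically, which lets an integration flag a provider upgrade that may break it before anyone adopts it. A minimal sketch that parses plain `MAJOR.MINOR.PATCH` strings (pre-release and build suffixes such as `-beta.1` are deliberately not handled) and treats a major-version change as breaking; the function names are illustrative.

```python
from typing import NamedTuple

class Version(NamedTuple):
    major: int
    minor: int
    patch: int

def parse_version(text: str) -> Version:
    """Parse a plain MAJOR.MINOR.PATCH string such as '2.4.1'."""
    major, minor, patch = (int(part) for part in text.split("."))
    return Version(major, minor, patch)

def is_breaking_upgrade(current: str, candidate: str) -> bool:
    """True if moving from `current` to `candidate` may introduce breaking changes."""
    old, new = parse_version(current), parse_version(candidate)
    if new.major != old.major:
        return True
    # SemVer treats 0.y.z as initial development, where any minor change may break clients.
    return new.major == 0 and new.minor != old.minor
```

Because `Version` is a named tuple, versions compare numerically field by field, so `2.10.0` correctly sorts after `2.9.9`, unlike a plain string comparison.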

Automating Testing and Validation

As your API integration grows more complex, manual testing becomes inefficient. Automated testing is essential, especially for real-time data integrations, enabling faster and more reliable validation [20][23].

Studies like the State of Testing in DevOps Report highlight that automated API testing significantly improves user satisfaction [22]. This is particularly critical in construction, where accurate data can directly impact project decisions and safety.

An effective testing strategy should include:

- Unit and Integration Tests: Catch issues early when they’re easiest to fix [20][22][23].

- Comprehensive Endpoint Testing: Cover all scenarios, including errors like 404s, 500s, and invalid inputs [20][21][23].

- Schema Validation: Use tools like JSON Schema or OpenAPI specifications to ensure data formats and parameters are accurate [21][24].

- Contract Testing: Tools like Pact can confirm that API providers and consumers are aligned, preventing unexpected breaks in functionality [21].

Teams that invest in automated testing often see a 40% drop in support tickets related to API issues [25][28]. This frees up resources for new feature development instead of troubleshooting.

Documentation and Team Knowledge Sharing

Clear, up-to-date documentation isn’t just a nice-to-have – it’s a necessity. Poor documentation slows development, while well-maintained, version-controlled documentation speeds up onboarding and reduces support requests [25].

To keep your documentation effective:

- Document as Code: This allows for version control, automated validation, and collaborative updates [25][28].

- Include Key Details: Cover endpoint descriptions, request parameters, authentication methods, response formats, error codes, and real-world use cases [25][26][27].

- Focus on Specific Workflows: For construction APIs, document workflows like project creation, material tracking, and progress reporting.

You can also establish a Documentation Champions Program, where team members from different departments advocate for and review documentation practices. This prevents knowledge from being siloed and ensures everyone stays informed.

Regularly review your documentation – every 3–6 months – and use version control to track changes. This helps maintain context as your API evolves and supports a smoother integration process for all teams involved.

Leveraging AlterSquare’s Post-Launch Support

Maintaining real-time construction data APIs can be resource-intensive. AlterSquare offers a Post-Launch Support program to help teams manage these challenges. Their services include continuous monitoring, automated testing upkeep, and strategic advice tailored to your API integration.

AlterSquare’s engineering-as-a-service model is particularly valuable for complex scenarios like multi-vendor API orchestration or updating legacy systems. Their structured framework ensures every maintenance task follows a rigorous process – mirroring the care put into the initial development phase.

For startups, AlterSquare’s 90-day MVP program can transition into long-term support arrangements. This ensures your integration scales effectively and adapts to changing project demands. Support plans are customized to match your product’s growth stage and technical needs, offering flexibility as your system evolves.

Conclusion

Integrating real-time construction data APIs is a game-changer for developing competitive construction tech products. By following a structured approach – choosing secure API providers, implementing robust OAuth 2.0 authentication, and continuously monitoring performance – you can ensure a smooth and reliable data flow that supports fast, informed decision-making.

Real-time data integration reshapes how construction teams work. Instead of waiting hours or even days for updates, teams can instantly adjust bids, schedules, and resource allocations. This level of responsiveness not only enhances client satisfaction but also helps position your product as a leader in the highly competitive U.S. construction market.

However, realizing these benefits requires tackling integration challenges head-on. Issues like synchronization, scalability, and security can be addressed with strategies such as precise timestamp synchronization, scalable cloud-based infrastructure, and secure authentication protocols. Regular monitoring and robust error-handling mechanisms – like exponential backoff – help maintain reliability as your system grows.

For startups, mastering these technical fundamentals is especially critical. Expert support can make a significant difference here. AlterSquare’s 90-day MVP program and engineering-as-a-service model simplify complex integrations, making it easier to manage multiple APIs and modernize outdated systems.

AlterSquare’s delivery framework – spanning discovery, strategy, design, agile development, launch preparation, and post-launch support – provides a clear roadmap for successful API integration. This methodical approach minimizes costly mistakes and accelerates your product’s time-to-market.

As the construction industry continues to embrace digital transformation, real-time API integration will set your product apart. Teams that invest in thorough implementation today, with expert guidance when necessary, will be better equipped to seize market opportunities and deliver the responsive, data-driven tools that modern construction professionals expect.

FAQs

What security measures should developers prioritize when integrating real-time construction data APIs?

To keep real-time construction data APIs secure, start with strong access controls such as OAuth 2.0. This ensures that only authorized users can access the data. Additionally, encrypt data both during transmission (using TLS) and when stored to shield sensitive information from potential breaches or interception.

It’s also important to perform regular security audits and keep an eye out for unusual activity. This proactive monitoring helps identify and address vulnerabilities before they become bigger issues. Lastly, adhering to industry standards and best practices is key to creating a secure API environment. These measures not only protect construction data but also help maintain user trust in your application.

What steps can developers take to ensure their API integration scales effectively as data volumes grow?

To make API integration work smoothly as it grows, developers should focus on scalable architecture principles. This means using techniques like horizontal scaling, load balancing, and caching to manage larger data loads effectively. These strategies ensure the system can handle increased demand without breaking a sweat.

Equally important is having centralized monitoring and logging in place. This helps keep tabs on performance and makes troubleshooting issues much faster and more efficient.

When it comes to managing data, prioritize efficient data handling. Avoid transferring unnecessary data, use queuing systems to tackle heavy workloads, and fine-tune API requests to reduce strain. By designing APIs with flexibility and future growth in mind, you can ensure they remain reliable and high-performing as project needs evolve.

What are the best practices for keeping API integrations updated and reliable over time?

To keep your API integrations running smoothly and up to date, start by incorporating automated testing into your CI/CD pipelines. This approach catches errors or changes early, helping you maintain consistent functionality. It’s also crucial to regularly update and review your API keys and tokens to uphold strong security measures. Clear and thorough documentation of your integration code can save time when it comes to updates or troubleshooting.

For long-term stability, adopting API versioning can help you manage breaking changes effectively. Pair this with periodic code reviews to ensure your integration remains reliable and scalable. These proactive steps can minimize disruptions and keep your systems operating seamlessly.
